[Kubernetes] Installing Kubernetes with kubeadm

Use the kubeadm tool to install Kubernetes on a cluster.

UPDATE 2020/05/15:

- Revised the layout

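1. Before you begin

- Operating system:
  - Ubuntu 16.04+
  - Debian 9+
  - CentOS 7
  - Red Hat Enterprise Linux (RHEL) 7
  - Fedora 25+
  - HypriotOS v1.0.1+
  - Container Linux (tested with 1800.6.0)
- RAM >= 2GB
- 2 CPUs or more
- Full network connectivity between all machines in the cluster
- A unique hostname and MAC address for every node
- Swap disabled
- Access to the image registries (external network)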

Do not use nftables

Disable nftables

Kubernetes does not support the nftables backend. If nftables is enabled, switch back to the legacy iptables backend first.

Reference:

    $ sudo update-alternatives --set iptables /usr/sbin/iptables-legacy
    $ sudo update-alternatives --set ip6tables /usr/sbin/ip6tables-legacy
    $ sudo update-alternatives --set arptables /usr/sbin/arptables-legacy
    $ sudo update-alternatives --set ebtables /usr/sbin/ebtables-legacy
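To see which backend a node is currently using, one option is to look at the version string that iptables prints. The sketch below is a minimal helper of my own (the function name `iptables_backend` is hypothetical); it assumes iptables 1.8 or newer, which appends the backend name in parentheses to its version output.

```shell
#!/bin/sh
# Classify the backend named in the output of `iptables --version`.
# iptables 1.8+ appends "(nf_tables)" or "(legacy)" to the version string;
# older builds print no suffix and are reported as "unknown".
iptables_backend() {
  case "$1" in
    *"(nf_tables)"*) echo "nf_tables" ;;
    *"(legacy)"*)    echo "legacy" ;;
    *)               echo "unknown" ;;
  esac
}

# Typical use on a node:
#   iptables_backend "$(iptables --version)"
```

If the helper reports nf_tables, the update-alternatives commands above are the ones to run.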

Check required ports

- Control-plane node

| Protocol | Direction | Port Range | Purpose | Used By |
|---|---|---|---|---|
| TCP | Inbound | 6443 | Kubernetes API server | All |
| TCP | Inbound | 2379-2380 | etcd server client API | kube-apiserver, etcd |
| TCP | Inbound | 10250 | Kubelet API | Self, Control plane |
| TCP | Inbound | 10251 | kube-scheduler | Self |
| TCP | Inbound | 10252 | kube-controller-manager | Self |

- Worker node

| Protocol | Direction | Port Range | Purpose | Used By |
|---|---|---|---|---|
| TCP | Inbound | 10250 | Kubelet API | Self, Control plane |
| TCP | Inbound | 30000-32767 | NodePort Services | All |
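Before installing, it can be useful to confirm that nothing else is already listening on these ports. The following is a rough sketch, not an official check: `ports_in_use` and `CONTROL_PLANE_PORTS` are names I made up, and the parser assumes the column layout of `ss -ltnH` from iproute2, where the fourth column is the local address and port.

```shell
#!/bin/sh
# Single ports from the control-plane table above (2379-2380 written out).
CONTROL_PLANE_PORTS="6443 2379 2380 10250 10251 10252"

# Read listener lines in the format of `ss -ltnH` on stdin and print each
# port from the list in $1 that already has a listener.
# Column 4 of that format is "Local Address:Port".
ports_in_use() {
  awk -v req="$1" '
    BEGIN { n = split(req, r, " "); for (i = 1; i <= n; i++) want[r[i]] = 1 }
    { k = split($4, a, ":"); if (a[k] in want) seen[a[k]] = 1 }
    END { for (i = 1; i <= n; i++) if (r[i] in seen) print r[i] }
  '
}

# Typical use on a node (iproute2 assumed):
#   ss -ltnH | ports_in_use "$CONTROL_PLANE_PORTS"
```

An empty result means none of the listed ports is taken.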

Clock synchronization

See this post for details.

Change the hostname

    $ vim /etc/hostname # change the hostname

After the change, the two virtual machines are configured as follows:

    # in k8s-master

Verify that the MAC address and product_uuid are unique

Documentation: Verify the MAC address and product_uuid are unique for every node

    [root@k8s-master ~]$ ifconfig -a # show the MAC address

Note: if your CentOS 7 system has no ifconfig command, install it with yum install net-tools.
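With more than a couple of machines, comparing the values by eye gets tedious. A small sketch for spotting collisions follows; `duplicate_uuids` is a hypothetical helper, `k8s-node1` is a made-up second host name, and the path `/sys/class/dmi/id/product_uuid` is the one given in the linked documentation.

```shell
#!/bin/sh
# Read "hostname uuid" pairs on stdin and print every UUID that is
# reported by more than one host, one per line.
duplicate_uuids() {
  awk '{ count[$2]++ } END { for (u in count) if (count[u] > 1) print u }' | sort
}

# Typical use (k8s-node1 is a hypothetical second host):
#   for h in k8s-master k8s-node1; do
#     echo "$h $(ssh "$h" sudo cat /sys/class/dmi/id/product_uuid)"
#   done | duplicate_uuids
```

No output means every node reported a distinct product_uuid. The same helper works for MAC addresses if the second column holds the MAC instead.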

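Configure the firewall

Documentation: Check required ports

This is a local intranet test environment, so for convenience I simply turned the firewall off. If your security requirements are higher, open the required ports as described in the official documentation instead.

    [root@k8s-master ~]$ systemctl stop firewalld # stop the service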
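If you would rather keep firewalld running, the ports can be opened one by one. This is a sketch under my own assumptions, not something I ran in the setup above: `firewalld_rules` is a hypothetical helper that only prints `firewall-cmd` invocations so they can be reviewed before anything changes.

```shell
#!/bin/sh
# Print (do not run) one firewall-cmd invocation per TCP port or port
# range in the space-separated list $1, followed by a reload.
firewalld_rules() {
  for port in $1; do
    echo "firewall-cmd --permanent --add-port=${port}/tcp"
  done
  echo "firewall-cmd --reload"
}

# Ports taken from the control-plane table earlier in this post.
# Review the output, then pipe it to `sudo sh` to apply it:
#   firewalld_rules "6443 2379-2380 10250 10251 10252" | sudo sh
```

Printing first and applying second keeps the step easy to audit on a machine with stricter security requirements.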

Disable SELinux

Edit /etc/selinux/config, set SELINUX=disabled, and reboot the machine.

    [root@k8s-master ~]$ sestatus # check the SELinux status
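The edit can also be scripted, which helps when preparing several nodes. A minimal sketch, assuming the stock layout of the file with a single `SELINUX=` line; the function name `set_selinux_disabled` is my own.

```shell
#!/bin/sh
# Rewrite the SELINUX= line of the config file $1 to "disabled".
# The SELINUXTYPE= line is left alone, and a copy of the original file
# is kept next to it with a .bak suffix.
set_selinux_disabled() {
  sed -i.bak 's/^SELINUX=.*/SELINUX=disabled/' "$1"
}

# Typical use on a node, as root, followed by a reboot:
#   set_selinux_disabled /etc/selinux/config
```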

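Disable swap

Documentation: Before you begin

Swap disabled. You MUST disable swap in order for the kubelet to work properly.

Edit /etc/fstab, comment out the swap entry, and reboot the machine.

    # Note: if the file is not writable, add write permission manually and restore it after editing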

UPDATE 2020/05/17

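Even after following the steps above, I found several times that swap was back after a reboot, so I switched to a more aggressive approach:

    # Edit fstab and comment out every swap entry
    $ vim /etc/fstab
    # Cut the weeds without pulling the roots, and they grow back
    # Make sure swap is not mounted automatically after a reboot (this has happened on my machines many times)
    $ sudo systemctl mask dev-sdXXX.swap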
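The fstab edit is easy to get wrong by hand, so here is a sketch of doing it with sed. The helper name `comment_out_swap` is hypothetical, and the pattern assumes ordinary fstab lines where `swap` appears as its own whitespace-separated field.

```shell
#!/bin/sh
# Comment out every active line of fstab file $1 that has "swap" as a
# whitespace-delimited field. Lines that are already comments are left
# untouched, and a .bak copy of the original file is kept.
comment_out_swap() {
  sed -i.bak -E '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$1"
}

# Typical use on a node, as root:
#   swapoff -a                       # turn swap off for the running system
#   comment_out_swap /etc/fstab      # keep it off in fstab
#   systemctl list-units --type=swap # find the unit name to mask
```

The last command lists the swap units systemd knows about, which is where the dev-sdXXX.swap name for the mask command comes from.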

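Install Docker

The official Docker documentation describes the installation steps in enough detail and the process is not complicated, so this post does not repeat them.

- Use Docker 18.09. At the time of writing, k8s did not yet support the latest Docker release, 19.x, so specify the version explicitly as the documentation describes: yum install docker-ce-18.09.9-3.el7 docker-ce-cli-18.09.9-3.el7 containerd.io.
- If your connection is poor, switch to a mirror in mainland China such as Alibaba Cloud or USTC. For reference, the Alibaba Cloud Docker installation guide: Container Registry.
- After installation, consider pointing Docker at a registry mirror in mainland China. The Alibaba Cloud registry accelerator is recommended and is free with an Alibaba Cloud account.

Alibaba Cloud -> Container Registry -> Image Center -> Image Accelerator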

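Configure Docker

Documentation: Container runtimes

Change /etc/docker/daemon.json to the following:

    {

- Here https://xxxxxxxx.mirror.aliyuncs.com is the Alibaba Cloud registry accelerator address; replace xxxxxxxx with the address from your own account.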
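For orientation, here is one way such a file could be generated. This is an assumption on my part and not a copy of the file used in this setup: the keys follow the Docker section of the Container runtimes page (systemd cgroup driver, json-file logging, overlay2), with `registry-mirrors` added for the accelerator address. The function name `write_daemon_json` is hypothetical.

```shell
#!/bin/sh
# Write a daemon.json to path $1 using registry mirror URL $2.
# The keys below are an assumption based on the "Container runtimes"
# documentation; adjust them to your environment.
write_daemon_json() {
  cat > "$1" <<EOF
{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": { "max-size": "100m" },
  "storage-driver": "overlay2",
  "registry-mirrors": ["$2"]
}
EOF
}

# Typical use on a node, as root:
#   write_daemon_json /etc/docker/daemon.json https://xxxxxxxx.mirror.aliyuncs.com
#   systemctl restart docker
```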

After installation and configuration, run:

    [root@k8s-master ~]$ systemctl enable docker

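Install kubeadm, kubelet, and kubectl

kubeadm is the deployment tool published by the Kubernetes community, and it greatly lowers the cost of deploying a cluster. The related commands are:

- kubeadm: the command that bootstraps the cluster.
- kubelet: the component that runs on every node in the cluster and runs pods and containers.
- kubectl: the command-line tool for controlling the cluster, used on the control-plane node to manage the whole system.

First install the three tools and pin their versions, so that a later apt upgrade cannot leave the components at incompatible versions and cause errors.

Reference: version skew

    $ sudo apt-get update && sudo apt-get install -y apt-transport-https curl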
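Pinning matters because kubeadm complains when the requested Kubernetes version and the installed tools drift apart. As a rough sanity check, the sketch below compares minor versions; `minor_of` and `same_minor` are hypothetical helpers of mine, and the check is deliberately stricter than the official version skew policy, which tolerates some differences.

```shell
#!/bin/sh
# Print the minor version number of a tag such as v1.17.0 or 1.16.3.
minor_of() {
  v="${1#v}"        # drop a leading "v" if present
  v="${v#*.}"       # drop the major part and its dot
  echo "${v%%.*}"   # keep what precedes the next dot
}

# Succeed when both versions belong to the same minor release.
same_minor() {
  [ "$(minor_of "$1")" = "$(minor_of "$2")" ]
}

# On apt-based systems the packages can be held at their installed
# version with:
#   sudo apt-mark hold kubelet kubeadm kubectl
```

For example, `same_minor v1.17.0 1.16.3` fails, which mirrors the situation where a 1.16 kubeadm is asked to install Kubernetes 1.17.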

Install Kubernetes

Run $ kubeadm config print init-defaults > init.default.yaml to get the default configuration file. Copy it with cp init.default.yaml init-config.yaml and edit the copy with vim init-config.yaml to customize the settings; the available fields are described in the kubeadm documents. My modified file is as follows:

    apiVersion: kubeadm.k8s.io/v1beta2
    bootstrapTokens:
    - groups:
      - system:bootstrappers:kubeadm:default-node-token
      token: abcdef.0123456789abcdef
      ttl: 24h0m0s
      usages:
      - signing
      - authentication
    kind: InitConfiguration
    localAPIEndpoint:
      advertiseAddress: 192.168.1.251
      bindPort: 6443
    nodeRegistration:
      criSocket: /var/run/dockershim.sock
      name: k8s-master
      taints:
      - effect: NoSchedule
        key: node-role.kubernetes.io/master
    ---
    apiServer:
      timeoutForControlPlane: 4m0s
    apiVersion: kubeadm.k8s.io/v1beta2
    certificatesDir: /etc/kubernetes/pki
    clusterName: kubernetes
    controllerManager: {}
    dns:
      type: CoreDNS
    etcd:
      local:
        dataDir: /var/lib/etcd
    imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers
    kind: ClusterConfiguration
    kubernetesVersion: v1.17.0
    networking:
      dnsDomain: cluster.local
      serviceSubnet: 10.96.0.0/12
    scheduler: {}

Run $ kubeadm config images pull first to make sure you can reach gcr.io; if you run into problems, see Troubleshooting 1. Then run kubeadm init to start the installation, which prints the following:


    root@openstack1:~/kubernetes# kubeadm init --config=init-config.yaml
    [init] Using Kubernetes version: v1.17.0
    [preflight] Running pre-flight checks
    [WARNING KubernetesVersion]: Kubernetes version is greater than kubeadm version. Please consider to upgrade kubeadm. Kubernetes version: 1.17.0. Kubeadm version: 1.16.x
    ···
    ···
    Your Kubernetes control-plane has initialized successfully!

    To start using your cluster, you need to run the following as a regular user:

      mkdir -p $HOME/.kube
      sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
      sudo chown $(id -u):$(id -g) $HOME/.kube/config

    You should now deploy a pod network to the cluster.
    Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
      https://kubernetes.io/docs/concepts/cluster-administration/addons/

    Then you can join any number of worker nodes by running the following on each as root:

    kubeadm join 192.168.1.251:6443 --token abcdef.0123456789abcdef \
        --discovery-token-ca-cert-hash sha256:0a77d9dc7d5fd833203211767a869324e512222540f9bbf0e70cb4bb87d981c0

Then, following the prompt, create the local Kubernetes configuration:

    $ mkdir -p $HOME/.kube
    $ sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
    $ sudo chown $(id -u):$(id -g) $HOME/.kube/config

kubeadm also installs the kubelet on the Master, but by default the Master does not take workloads. If you want an all-in-one Kubernetes environment, run the command below so that the Master can also act as a Node:

    # Remove the taint "node-role.kubernetes.io/master" from all nodes
    $ kubectl taint nodes --all node-role.kubernetes.io/master-

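Network plugin

A network plugin builds a virtual network layer in the cluster for communication between pods. Note: pods can only communicate with each other after a network plugin has been installed. Common network plugins include Weave Net and Calico; the installation of both is described below.

The network must be deployed before any applications. Also, CoreDNS will not start up before a network is installed. kubeadm only supports Container Network Interface (CNI) based networks (and does not support kubenet)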

Weave Net

Original text:

Set /proc/sys/net/bridge/bridge-nf-call-iptables to 1 by running sysctl net.bridge.bridge-nf-call-iptables=1 to pass bridged IPv4 traffic to iptables' chains. This is a requirement for some CNI plugins to work, for more information please see here.

The official Weave Net set-up guide is here.

Weave Net works on amd64, arm, arm64 and ppc64le without any extra action required. Weave Net sets hairpin mode by default. This allows Pods to access themselves via their Service IP address if they don't know their PodIP.

For the cluster's CNI plugin to work properly, first set /proc/sys/net/bridge/bridge-nf-call-iptables to 1:

    $ sysctl net.bridge.bridge-nf-call-iptables=1
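A value set with a bare sysctl command is lost on reboot. One way to keep it is a drop-in file under /etc/sysctl.d, sketched below. The helper name `write_bridge_sysctl` and the file name `k8s.conf` are my own choices, and the ip6tables line is an addition taken from the kubeadm installation guide rather than from the text quoted above.

```shell
#!/bin/sh
# Write the bridge netfilter settings to the sysctl drop-in file $1.
write_bridge_sysctl() {
  cat > "$1" <<EOF
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
}

# Typical use on a node, as root (k8s.conf is an arbitrary file name):
#   modprobe br_netfilter    # the sysctl keys exist only with this module
#   write_bridge_sysctl /etc/sysctl.d/k8s.conf
#   sysctl --system          # reload all sysctl configuration files
```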

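Next, install Weave Net. The command passes the cluster's Kubernetes version in the manifest URL so that a matching manifest is returned:

    kubectl apply -f "https://cloud.weave.works/k8s/net?k8s-version=$(kubectl version | base64 | tr -d '\n')"

Once it is installed, Pods can reach services through the Service IP rather than the Pod IP.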

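Calico

Download the manifest:

    [root@k8s-master ~]$ wget https://docs.projectcalico.org/v3.8/manifests/calico.yaml

Open calico.yaml and change 192.168.0.0/16 to 10.96.0.0/12.

Note that the address range in calico.yaml must be consistent with the kubeadm configuration file (init-config.yaml above): either edit the kubeadm configuration before initializing, or edit calico.yaml afterwards. The value in calico.yaml is the pod network range (CALICO_IPV4POOL_CIDR), which corresponds to networking.podSubnet in the kubeadm configuration. Since 10.96.0.0/12 is also the serviceSubnet in the configuration shown earlier, and the pod range should not overlap the service range or the hosts' own network, a separate range set as podSubnet is the safer choice.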
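Overlapping ranges are easy to miss when reading CIDR notation, so a small check can help before applying the manifest. This is only a sketch for IPv4: `ip_to_int` and `cidrs_overlap` are hypothetical helpers, and 10.244.0.0/16 in the usage note is just an example range, not a value from this setup.

```shell
#!/bin/sh
# Convert a dotted IPv4 address to an integer. The body runs in a
# subshell so the IFS change does not leak to the caller.
ip_to_int() (
  IFS=.
  set -- $1
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
)

# Succeed when two IPv4 CIDR blocks share at least one address.
# Two blocks overlap exactly when they agree on the shorter prefix.
cidrs_overlap() (
  len1=${1#*/}; len2=${2#*/}
  len=$(( len1 < len2 ? len1 : len2 ))
  mask=$(( len == 0 ? 0 : (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  a=$(ip_to_int "${1%/*}")
  b=$(ip_to_int "${2%/*}")
  [ $(( a & mask )) -eq $(( b & mask )) ]
)

# Typical use:
#   cidrs_overlap 192.168.0.0/16 192.168.1.251/32 && echo "overlaps host network"
#   cidrs_overlap 10.244.0.0/16 10.96.0.0/12 || echo "pod and service ranges are disjoint"
```

The first call shows why the Calico default of 192.168.0.0/16 has to change here: it contains the master's address 192.168.1.251.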

Run kubectl apply -f calico.yaml to set up the network.

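Now check the node information; the master's status is already Ready.

    [root@k8s-master ~]$ kubectl get node

Note:

To uninstall a network plugin, deleting its containers is not enough. Also remove the virtual network interfaces it created, otherwise the next installation will run into problems.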

Dashboard

Documentation: Web UI (Dashboard)

Deploy the Dashboard

The following command creates a dashboard from the official template:

    $ kubectl apply -f https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.0-beta8/aio/deploy/recommended.yaml

Create a user

Documentation: Creating sample user

Because of its security model, k8s manages user access very strictly. The goal here is to create a user for logging in to the Dashboard. Create the file dashboard-adminuser.yaml with the following content:

    apiVersion: v1

Then run kubectl apply -f dashboard-adminuser.yaml.

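Ways to access the dashboard

- The kubernetes-dashboard service exposes a NodePort, so the dashboard can be reached at http://NodeIP:nodePort
- Access the dashboard through kubectl proxy
- Access the dashboard through the API server (HTTPS on port 6443 or HTTP on port 8080)

The first two are covered in this article; this post focuses on the third.

In the ~/.kube/ directory, run:

    grep 'client-certificate-data' ~/.kube/config | head -n 1 | awk '{print $2}' | base64 -d >> kubecfg.crt

The third command asks for a password. Remember the password you enter. The result is a kubecfg.p12 file. Copy it (with scp) to the host from which you will access the dashboard, open the certificate file on that host, unlock it with that password, and import it into the system.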

Next, visit http://localhost:8001/api/v1/namespaces/kubernetes-dashboard/services/https:kubernetes-dashboard:/proxy/

If the page shows

    {

then refer to this issue and add the change it describes to the recommended.yaml that was used earlier to deploy the dashboard.

Restart the dashboard with: kubectl replace --force -f recommended.yaml

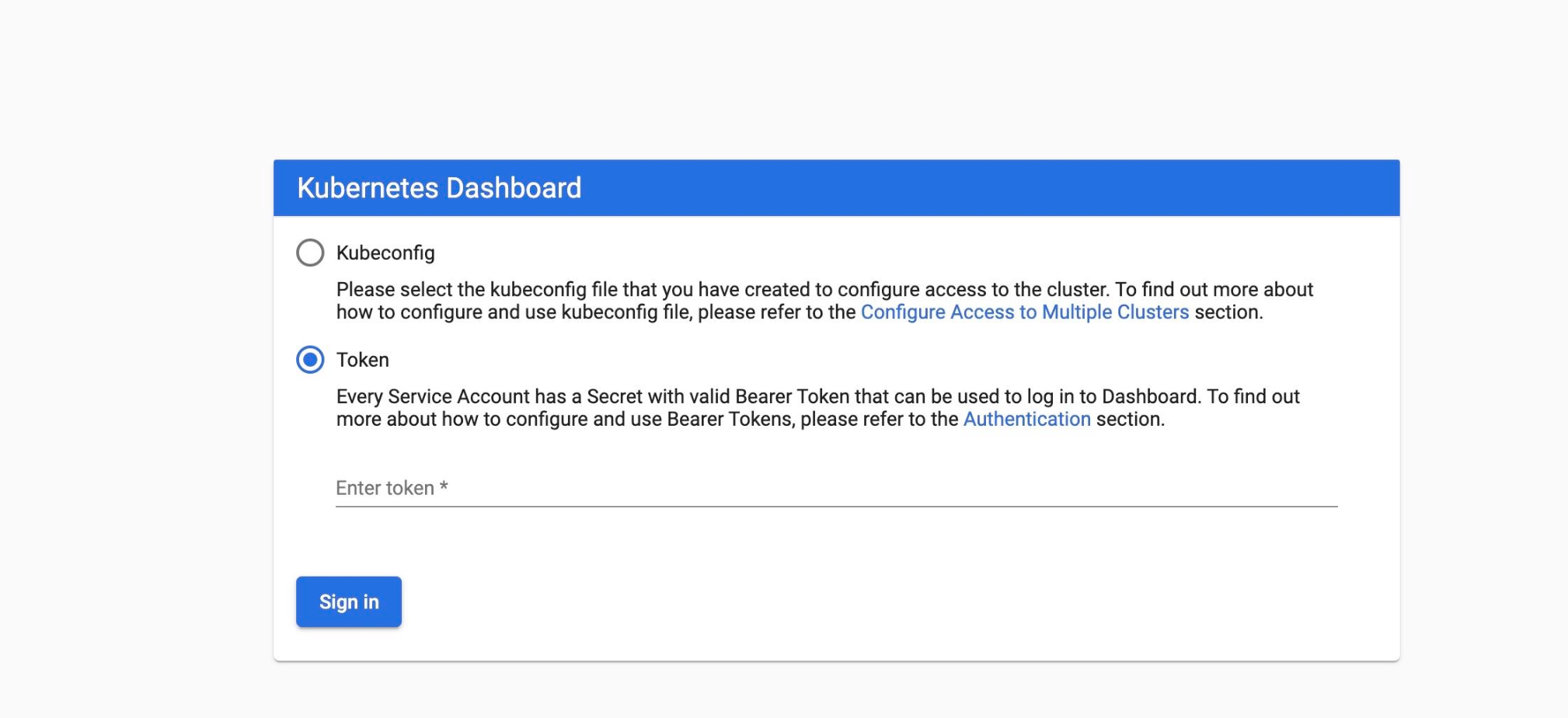

继续打开页面https://{k8s-master-ip}:6443/api/v1/namespaces/kubernetes-dashboard/services/https:kubernetes-dashboard:/proxy/#/login 将会看到下图

此时需要使用Token登录

Generate a Token

Run the following command to get the Token.

    $ kubectl -n kube-system describe secret $(kubectl -n kube-system get secret | grep admin-user | awk '{print $1}')

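Join other nodes to the cluster

To add another machine to the cluster:

- Install kubeadm and kubelet as described above
- Disable swap
- Carry out steps 2 and 3

Then run:

    $ kubeadm join 192.168.1.251:6443 --token abcdef.0123456789abcdef \
        --discovery-token-ca-cert-hash sha256:9c06556766e6dbf15385dc62b99e4f40d15b8412ca5bd853a79cbee1f14724f8

(This is the command printed by kubeadm init above. Its token is not the token used to log in to the Dashboard.)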

- If you do not have the token, run kubeadm token list on the master to view it.
- The default token expires after 24 hours. If it has expired, run kubeadm token create to get a new one, or use kubeadm token list to view the existing tokens.

Get the SHA-256 hash of the CA certificate:

    openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | openssl rsa -pubin -outform der 2>/dev/null | openssl dgst -sha256 -hex | sed 's/^.* //'
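With a token and the hash in hand, the join command can be put back together. The sketch below only prints the command; `valid_token` and `join_command` are hypothetical helpers, and the token pattern is the usual bootstrap token shape of six characters, a dot, and sixteen characters.

```shell
#!/bin/sh
# Succeed when $1 has the bootstrap token shape: 6 characters, a dot,
# then 16 characters, all lowercase letters or digits.
valid_token() {
  printf '%s\n' "$1" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'
}

# Print a kubeadm join command from an endpoint ($1), a token ($2), and
# the hex digest of the CA public key ($3). Nothing is executed.
join_command() {
  valid_token "$2" || { echo "malformed token: $2" >&2; return 1; }
  echo "kubeadm join $1 --token $2 --discovery-token-ca-cert-hash sha256:$3"
}

# Typical use, with <hash> taken from the openssl pipeline above:
#   join_command 192.168.1.251:6443 abcdef.0123456789abcdef <hash>
```

On the master, kubeadm token create --print-join-command produces a fresh token and the full join command in one step, which avoids assembling it by hand.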

Tear down the cluster

To undo what kubeadm did, you should first drain the node and make sure that the node is empty before shutting it down.

Talking to the control-plane node with the appropriate credentials, run:

    $ kubectl drain <node name> --delete-local-data --force --ignore-daemonsets

Then, on the node being removed, reset all kubeadm installed state:

    $ kubeadm reset

The reset process does not reset or clean up iptables rules or IPVS tables. If you wish to reset iptables, you must do so manually:

    $ iptables -F && iptables -t nat -F && iptables -t mangle -F && iptables -X

If you want to reset the IPVS tables, you must run the following command:

    $ ipvsadm -C

If you wish to start over simply run kubeadm init or kubeadm join with the appropriate arguments.

More options and information about the kubeadm reset command

    Failed to create pod sandbox: rpc error: code = Unknown desc = [failed to set up sandbox container "2fd3d89066e8990021729afcb5b209dc5246ce1cbba6ee16b7242bdbef1dfc66" network for pod "calico-kube-controllers-778676476b-rrwsh": networkPlugin cni failed to set up pod "calico-kube-controllers-778676476b-rrwsh_kube-system" network: error getting ClusterInformation: resource does not exist: ClusterInformation(default) with error: clusterinformations.crd.projectcalico.org "default" not found, failed to clean up sandbox container "2fd3d89066e8990021729afcb5b209dc5246ce1cbba6ee16b7242bdbef1dfc66" network for pod "calico-kube-controllers-778676476b-rrwsh": networkPlugin cni failed to teardown pod "calico-kube-controllers-778676476b-rrwsh_kube-system" network: error getting ClusterInformation: resource does not exist: ClusterInformation(default) with error: clusterinformations.crd.projectcalico.org "default" not found]